Public Speaking Training

- Specific training scenarios developed by experts: various professional contexts, different audience attitudes, and diverse tasks to perform.

- Easy to access and use: designed for Meta Quest VR headsets in standalone mode. No computer required. Hand tracking: no need for controllers or joysticks.

- Multi-user experience. You can be the trainee or, for more interactions or more complex scenarios, a member of the audience… from anywhere in the world.

- High-quality immersion and a strong sense of presence. Truly immersive environments featuring advanced elements such as photorealistic avatars based on real people, libraries of validated virtual agent behaviors, and artificial intelligence for richer interactions.

- Replay. After your performance, relive it in 3D, this time from the audience’s point of view.

- Interactions with virtual agents powered by local AI (no data sharing with external companies, low latency...)

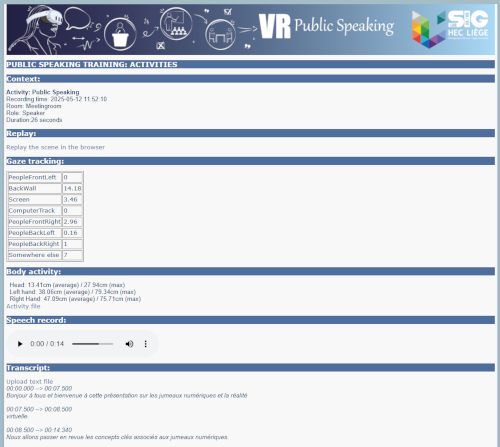

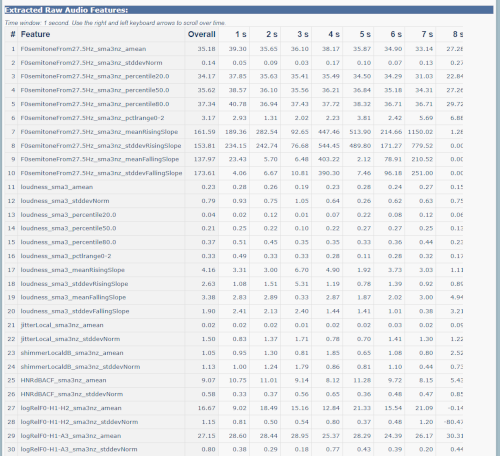

- Advanced feedback. A companion website where you (or your instructor) can upload your slides and notes, watch a 2D replay, and access several key performance indicators.

Master's thesis and student entrepreneurship presentations

A crucial step in your training is approaching: defending your master’s thesis in front of a panel of professors. Another possible scenario: you are a student entrepreneur and must pitch your project to your professors acting as "investors". Are you ready? Do you know what to expect?

This module helps you prepare for that moment. You stand in front of your professors with your slides and notes and rehearse your presentation. Their reactions may vary greatly, from supportive to skeptical. Will you also be able to answer their questions after your talk? These questions will be sharp and based directly on your presentation.

High School classroom

Partners: M-N. Hindryckx, A. Huby, C. Cravatte (Unit for Science Awakening and Biology Didactics)

This virtual reality environment was created for our colleagues from the Faculty of Science who train future secondary school biology teachers. The trainee teacher is immersed in a virtual secondary school classroom, facing around twenty adolescents. The behavior of these students depends on scenarios defined by our colleagues.

This is a multi-user environment. Some of the virtual teenagers can be embodied by members of the teaching staff or by other students wearing VR headsets, while still appearing as teenage students. This allows the audience's behavior to go beyond predefined scenarios, creating a highly realistic situation for the trainee teacher. It also eliminates the typical bias found in role-playing exercises where audience members are known to the participant and are not perceived as actual secondary school students.

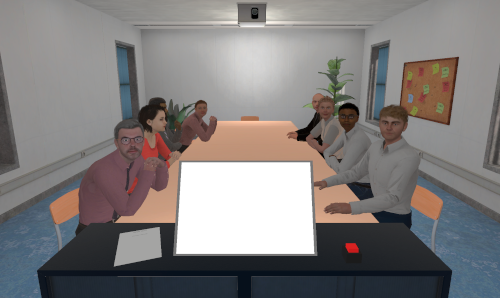

Board room and Meeting room

Whether delivering a progress report, presenting a proposal to a board, pitching a product or service to clients or investors, or leading a lecture or workshop, effective public speaking is a core professional skill. Yet for many, it remains a source of significant anxiety. While traditional training often relies on in-person coaching, VR offers a promising alternative by providing realistic, interactive, and repeatable scenarios within a controlled environment. By default, our system offers a range of audience attitudes, from supportive and engaged to negative or passive. Artificial intelligence techniques are integrated so that the audience’s attitude can be influenced in real time by verbal and non-verbal behaviors.

Courtroom

Partners: Y-H. Leleu (ULiège Faculty of Law) - J.W. Cho, T. Jung (Manchester Metropolitan University)

The famous moot court competition is coming up for our law students! But how many of them have ever set foot in a real courtroom? How many have actually practiced acting as defense counsel? Here is a first opportunity to experience it.

Office - recruitment process

Partners: Faculty of Law and Political Science

Recruitment is a common activity in companies. In this module, you can be the candidate undergoing a job interview or the recruiter learning the process. If you are the recruiter, will you be influenced by the person sitting across from you?

Auditorium

A variation on public speaking in a meeting room or a classroom: speaking in front of a very large audience in an amphitheater! Keeping such a large audience engaged without letting yourself be thrown off balance is yet another challenge.